Background

Helpshift is a customer service SAAS platform that enables you to provide a self-help experience(Help Center) & a real-time, conversational support experience on different platforms and devices.

Help Center's one of the main features is to display Frequently Asked Questions (FAQs). Frequently asked questions have properties similar to a blog. Just like a blog has a title, rich content, tags, search keywords, the ability to publish/unpublish, and the ability to schedule the publishing of posts, FAQ also has similar features. Having said that some intricacies need to be addressed specifically. One of the examples is support for the localization of FAQs. which means, we had to support translations for the FAQ in 185 languages. FAQs are rich content, meaning they can have images, videos, and rich textual properties hence they are stored as HTML documents.

In our primary database, Mongo, the FAQ HTML documents were stored alongside other fields such as title, search keywords, published status, schedules, and more. With support for 185 different languages, there can be a maximum of 185 HTML documents.

However, this setup started causing issues. Queries became slower, and memory consumption on Mongo nodes increased, affecting the rest of the application.

Causes

During the postmortem analysis, we identified three main issues:

Lack of size limit on HTML documents.

No restrictions on elements that can be added, such as Base64 images.

Denormalised schema design.

Concerns

Addressing issues such as adding a limit on FAQ size and reaching a consensus on the types of supported HTML elements required discussions with the customer and various stakeholders within the organization. Overall, this process was expected to be time-consuming.

Options

Based on our findings, we decided to clean up faqs collections by moving HTML content out of the database. We evaluated AWS s3, FSx, and EFS as the options.

S3 wasn’t a good option because of higher latencies. FSx lustre would have been an overkill for this use case. EFS (Elastic File System) seems like a good option as it has lower read latency(microseconds) and lower write latency (a couple of milliseconds). Also, one of the stated use cases of EFS is Content Management System so it made sense to use it for managing FAQ contents.

After careful consideration, we opted for AWS Elastic File System as it aligns well with our requirements:

Sub-millisecond response time for both reads and writes.

High availability as it is a distributed file system with replicas and backup facilities.

Cost-efficient

Solution

There needed a way to co-relate files on EFS with the entry in DB. At a time, there could be only one HTML document corresponding to individual translations of the FAQ. For this, we decided to consider properties of FAQ like domain, FAQ ID, language code, and updated-at.

The directory structure we finalized looked somewhat like this:

/faqs/<domain>/<faq-id>/<lang-code>-<updated-at>.html

Adding updated-at to the file path was mainly required to handle atomic writes

This is how the directory structure would look after migrating existing FAQ documents to the file system.

- faqs

- domain-1

- faq-id-1

- en-<updated_at_t1>.html

- en-<updated_at_t2>.html

- faq-id-2

- en-<updated_at>.html

- jp-<updated_at>.html

- domain-2

- faq-id-1

- en-<updated_at>.html

- es-<updated_at>.html

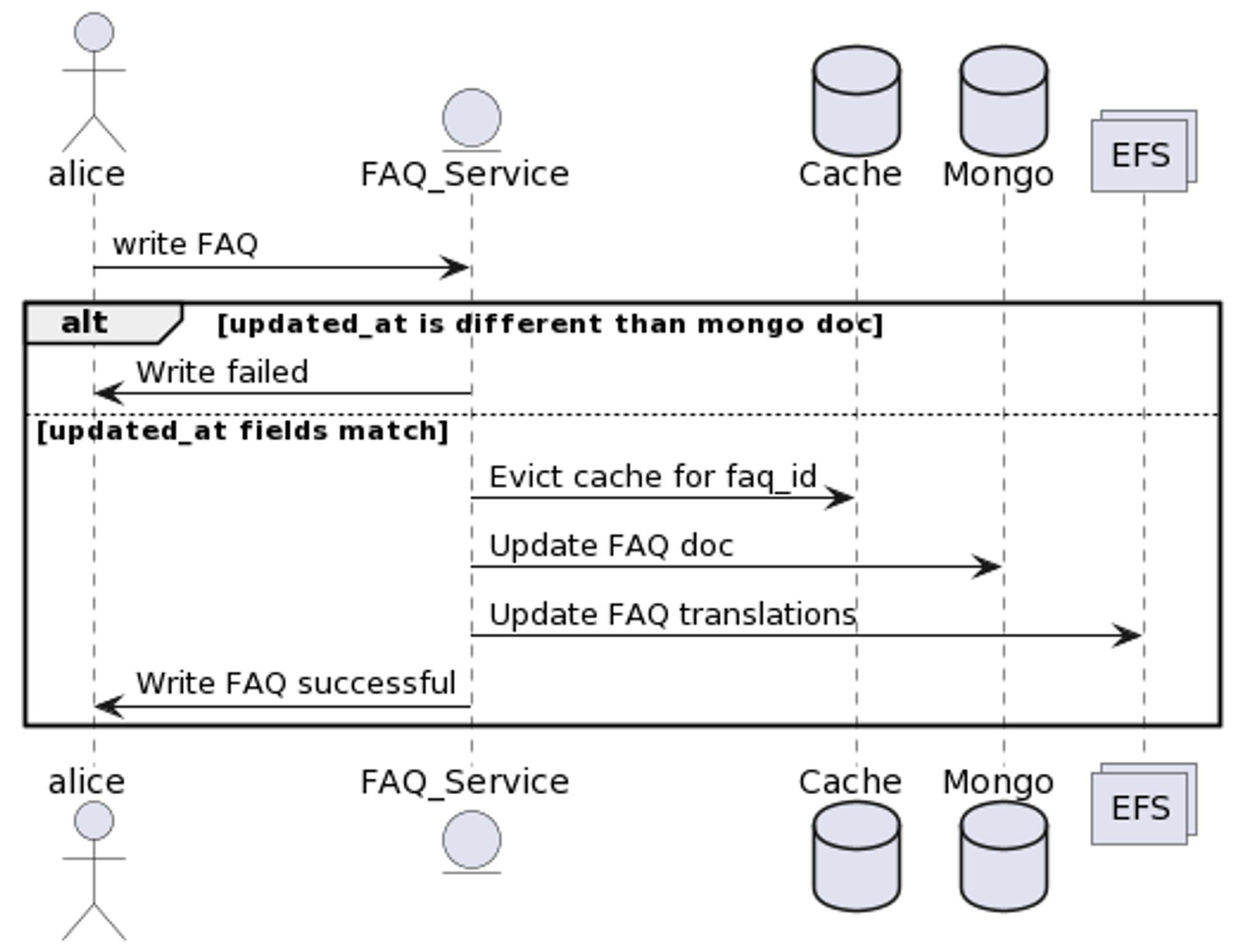

Handling Writes

Filesystems do not inherently ensure atomic writes. For instance, if a write operation to a file, such as en.html, is interrupted due to a process crash, the file may end up in an inconsistent state. Moreover, any read requests during the ongoing write process might retrieve inaccurate data.

To address this issue and guarantee consistent reads and writes, we have implemented a solution based on the compare-and-update approach. This atomic operation is instrumental in synchronizing data between MongoDB and the Elastic File System (EFS).

Our strategy involves using a timestamp, labeled as updated_at, at the application layer. This timestamp ensures that we are updating the same record that was accessed during the initial data read from the database and EFS. Through this method, we effectively prevent data corruption and consistently hold either entirely old or entirely new information. Also, the compare-and-update approach makes sure that files and documents in db are not updated concurrently.

Following are the details of the algorithm:

FAQ service’s HTTP API gets called for updating an FAQ.

FAQ service cancels the write operation if document with

updated_atvalue is not found. An appropriate error code/message is returned in the response to the API call.If the FAQ service finds a document corresponding to

updated_atvalue, it means that it has not been updated in the meantime and there are no chances of corrupting data.Following the above operation, it evicts the cache, updates FAQ document, updates HTML files on EFS, and finally responds with a success message in the API call.

Handling Reads

The aforementioned approach, incorporating the use of the updated-at timestamp during both file and document writes, effectively mitigates concurrency issues during read operations. Let's examine the potential concurrency problem scenarios:

User X initiates a request to read an FAQ.

Simultaneously, User Y requests to write to the same FAQ.

User X reads from MongoDB.

User Y writes to the document, updates the Elastic File System (EFS), and completes the transaction.

User X attempts to read from EFS.

Consequences:

Without the use of the updated-at timestamp, let's consider how this scenario could impact the read operation. User X reads the document from the database at step 3. Meanwhile, User Y writes to the same document and updates files on EFS at step 4. Suppose the modified file is en.html. Now, at step 5, User X reads en.html from EFS. Consequently, User X possesses a document containing mixed data—a blend of older metadata fetched from MongoDB and newer file versions.

Now, let's revisit the scenario where the updated-at timestamp is employed to version the files on EFS. With this approach, each write operation generates a new file on EFS. User X reads the document from the database at step 3, while User Y writes to the same document and updates files on EFS at step 4. Assuming the modified file is en-t2.html, at step 5, User X reads the file for the en language using the updated_at timestamp received in step 2. Therefore, the file fetched is en-t1.html. In essence, the data acquired by User X is not corrupted, as it combines metadata from the database and files from EFS of the same version. This ensures data consistency and prevents the retrieval of mixed or outdated information.

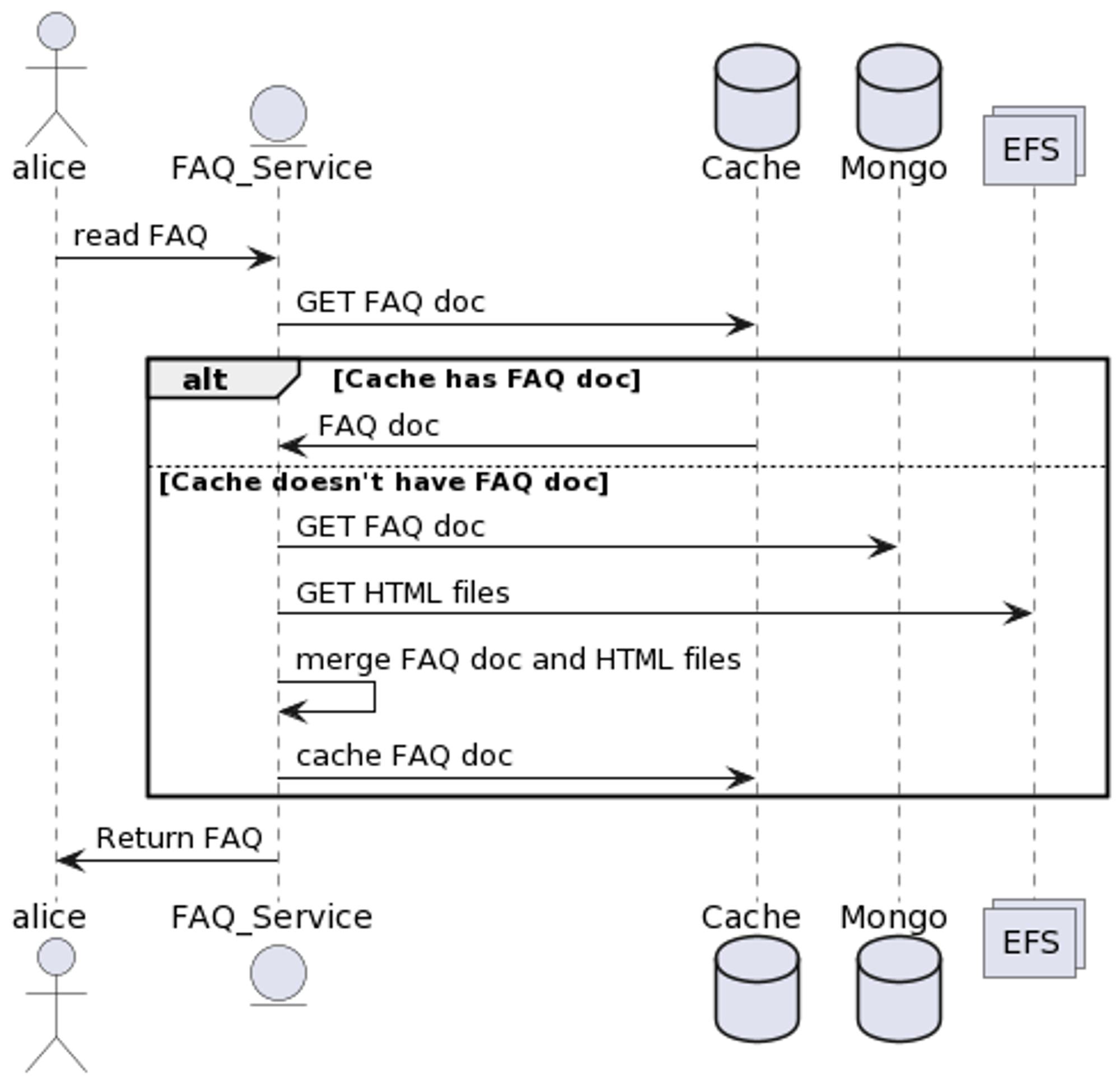

Following are the details of the read algorithm:

FAQ service gets a read HTTP API request

FAQ service first checks if the document corresponding to the FAQ ID is cached in the key-value store.

If the document is found in the cache then it is returned

Otherwise, the FAQ document is fetched from Mongo, files are fetched from EFS. Both results are merged, cached, and returned in the HTTP API call.

Note: It’s an existing architectural decision to evict cache on write paths and cache documents on read paths.

Managing Dangling Files Cleanup

When associating files with the updated_at timestamp, it's inevitable to encounter dangling files that require periodic deletion. This cleanup process can be treated as a scheduled monthly task.

Cleanup process:

Iterate through each FAQ in the

FAQscollection.Access the corresponding FAQ translations stored in the Elastic File System (EFS).

Examine the

updated_attimestamp for the language translation in the FAQ document.Delete all other

<language-code>-<updated_at>.htmlfiles, except for the one that aligns with theupdated_attimestamp in the language translation data of the MongoDB document.

Implementation Provisions

To ensure the smooth execution of this cleanup process, the following provisions should be considered:

Scheduled Execution: Set up a cron job to run this cleanup algorithm every month.

Manual Trigger: Provide a mechanism to manually trigger the cleanup job, offering flexibility in addressing specific cleanup requirements.

Domain-Specific Trigger: Incorporate a functionality to trigger the cleanup job selectively for a particular domain. This feature ensures faster processing and allows tailored cleanup based on specific domain needs.

Operability changes

For every FAQ service node, we need EFS to be mounted on the EC2 instance. This means while spawning a new FAQ service instance, it needs to have EFS mounted on it before the application starts serving the requests.

EFS multi-zone standard setup had good durability guarantees.

Files should be backed up on S3 similar to any other database.

Such data migration is a risky affair. In the next blog post, we'll examine how the whole project was managed and rolled out.